python获取http代理并验证

- 发表于

- 安全工具

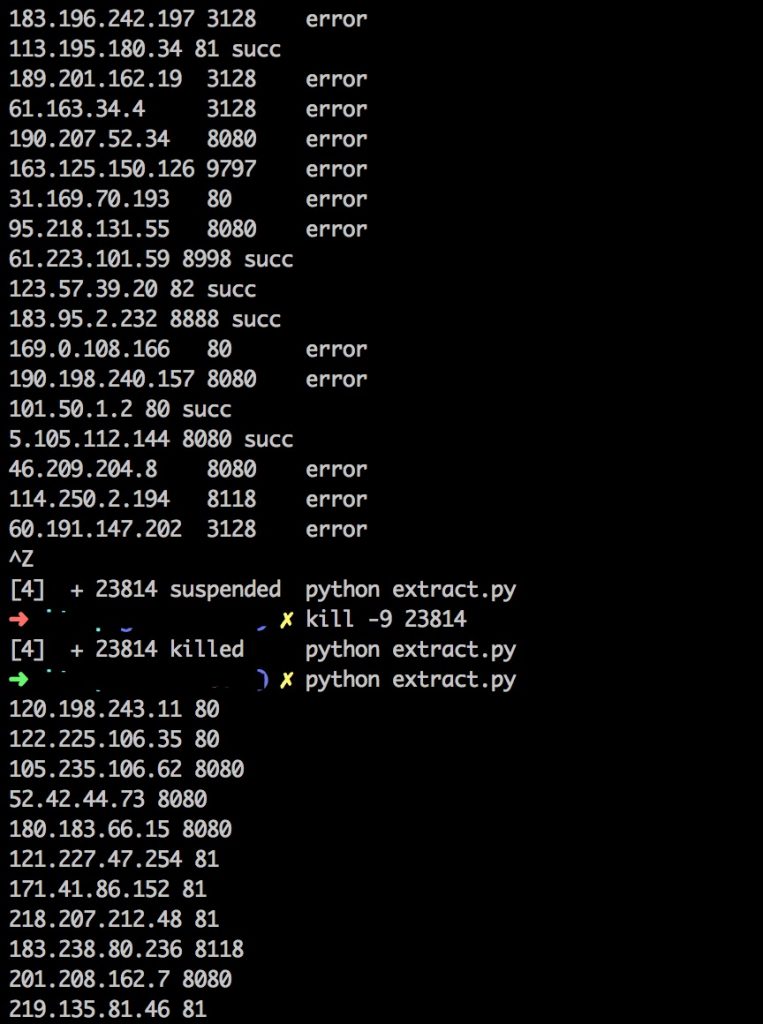

主要是从http://www.ip181.com/ http://www.kuaidaili.com/以及http://www.66ip.com/获取相关的代理信息,并分别访问v2ex.com以及guokr.com以进行验证代理的可靠性。

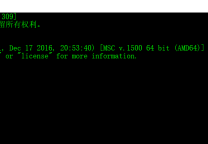

| 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103 104 105 106 107 108 109 110 111 112 113 114 115 116 117 118 119 120 121 122 123 124 125 126 127 128 129 130 131 132 133 134 135 136 137 138 139 140 141 142 143 144 145 146 147 148 149 150 151 152 153 154 155 156 157 158 159 160 161 162 163 164 165 166 167 168 169 170 171 172 173 174 175 176 177 178 179 180 181 182 183 184 185 186 | # -*- coding=utf8 -*- """ 从网上爬取HTTPS代理 """ import re import sys import time import Queue import logging import requests import threading from pyquery import PyQuery import requests.packages.urllib3 requests.packages.urllib3.disable_warnings() #logging.basicConfig( # level=logging.DEBUG, # format="[%(asctime)s] %(levelname)s: %(message)s") class Worker(threading.Thread): # 处理工作请求 def __init__(self, workQueue, resultQueue, **kwds): threading.Thread.__init__(self, **kwds) self.setDaemon(True) self.workQueue = workQueue self.resultQueue = resultQueue def run(self): while 1: try: callable, args, kwds = self.workQueue.get(False) # get task res = callable(*args, **kwds) self.resultQueue.put(res) # put result except Queue.Empty: break class WorkManager: # 线程池管理,创建 def __init__(self, num_of_workers=10): self.workQueue = Queue.Queue() # 请求队列 self.resultQueue = Queue.Queue() # 输出结果的队列 self.workers = [] self._recruitThreads(num_of_workers) def _recruitThreads(self, num_of_workers): for i in range(num_of_workers): worker = Worker(self.workQueue, self.resultQueue) # 创建工作线程 self.workers.append(worker) # 加入到线程队列 def start(self): for w in self.workers: w.start() def wait_for_complete(self): while len(self.workers): worker = self.workers.pop() # 从池中取出一个线程处理请求 worker.join() if worker.isAlive() and not self.workQueue.empty(): self.workers.append(worker) # 重新加入线程池中 #logging.info('All jobs were complete.') def add_job(self, callable, *args, **kwds): self.workQueue.put((callable, args, kwds)) # 向工作队列中加入请求 def get_result(self, *args, **kwds): return self.resultQueue.get(*args, **kwds) def check_proxies(ip,port): """ 检测代理存活率 分别访问v2ex.com以及guokr.com """ proxies={'http': 'http://'+str(ip)+':'+str(port)} try: r0 = requests.get('http://v2ex.com', proxies=proxies,timeout=30,verify=False) r1 = requests.get('http://www.guokr.com', proxies=proxies,timeout=30,verify=False) if r0.status_code == requests.codes.ok and r1.status_code == requests.codes.ok and "09043258" in r1.content and "15015613" in r0.content: #r0.status_code == requests.codes.ok and r1.status_code == requests.codes.ok and print ip,port return True else: return False except Exception, e: pass #sys.stderr.write(str(e)) #sys.stderr.write(str(ip)+"\t"+str(port)+"\terror\r\n") return False def get_ip181_proxies(): """ http://www.ip181.com/获取HTTP代理 """ proxy_list = [] try: html_page = requests.get('http://www.ip181.com/',timeout=60,verify=False,allow_redirects=False).content.decode('gb2312') jq = PyQuery(html_page) for tr in jq("tr"): element = [PyQuery(td).text() for td in PyQuery(tr)("td")] if 'HTTP' not in element[3]: continue result = re.search(r'\d+\.\d+', element[4], re.UNICODE) if result and float(result.group()) > 5: continue #print element[0],element[1] proxy_list.append((element[0], element[1])) except Exception, e: sys.stderr.write(str(e)) pass return proxy_list def get_kuaidaili_proxies(): """ http://www.kuaidaili.com/获取HTTP代理 """ proxy_list = [] for m in ['inha', 'intr', 'outha', 'outtr']: try: html_page = requests.get('http://www.kuaidaili.com/free/'+m,timeout=60,verify=False,allow_redirects=False).content.decode('utf-8') patterns = re.findall(r'(?P<ip>(?:\d{1,3}\.){3}\d{1,3})</td>\n?\s*<td.*?>\s*(?P<port>\d{1,4})',html_page) for element in patterns: #print element[0],element[1] proxy_list.append((element[0], element[1])) except Exception, e: sys.stderr.write(str(e)) pass for n in range(0,11): try: html_page = requests.get('http://www.kuaidaili.com/proxylist/'+str(n)+'/',timeout=60,verify=False,allow_redirects=False).content.decode('utf-8') patterns = re.findall(r'(?P<ip>(?:\d{1,3}\.){3}\d{1,3})</td>\n?\s*<td.*?>\s*(?P<port>\d{1,4})',html_page) for element in patterns: #print element[0],element[1] proxy_list.append((element[0], element[1])) except Exception, e: sys.stderr.write(str(e)) pass return proxy_list def get_66ip_proxies(): """ http://www.66ip.com/ api接口获取HTTP代理 """ urllists = [ 'http://www.proxylists.net/http_highanon.txt', 'http://www.proxylists.net/http.txt', 'http://www.66ip.cn/nmtq.php?getnum=1000&anonymoustype=%s&proxytype=2&api=66ip', 'http://www.66ip.cn/mo.php?sxb=&tqsl=100&port=&export=&ktip=&sxa=&submit=%CC%E1++%C8%A1' ] proxy_list = [] for url in urllists: try: html_page = requests.get(url,timeout=60,verify=False,allow_redirects=False).content.decode('gb2312') patterns = re.findall(r'((?:\d{1,3}\.){1,3}\d{1,3}):([1-9]\d*)',html_page) for element in patterns: #print element[0],element[1] proxy_list.append((element[0], element[1])) except Exception, e: sys.stderr.write(str(e)) pass return proxy_list def get_proxy_sites(): wm = WorkManager(20) proxysites = [] proxysites.extend(get_ip181_proxies()) proxysites.extend(get_kuaidaili_proxies()) proxysites.extend(get_66ip_proxies()) for element in proxysites: wm.add_job(check_proxies,str(element[0]),str(element[1])) wm.start() wm.wait_for_complete() if __name__ == '__main__': try: get_proxy_sites() except Exception as exc: print(exc) |

原文连接:python获取http代理并验证 所有媒体,可在保留署名、

原文连接

的情况下转载,若非则不得使用我方内容。